Comic Strip - An intersection of art and AI

Communication with machines has always been limited to conscious and direct forms, whether it’s something as simple as turning on the lights or as complex as programming. We only have to give a command to a machine for it to do something for us.

On the flip side, communication between people is far more complicated but a lot more interesting, because we take into account much more than what is explicitly expressed. We observe facial expressions and body language, and we read feelings and emotions from our dialogue with one another. However, the interpretation of facial expressions is not absolute: each person interprets them through his or her own experience and knowledge, so such interpretations are prone to misunderstandings and gaps!

To close these gaps, our team, ‘Comic Strips’, built a solution that understands not only the dialogue but the emotions too, and presents the entire conversation in the form of a ‘Comic Strip.’

The Idea:

The application ‘Comic Strip’ can be treated exactly like a comic artist: you narrate your story and hand over your photo, and the artist creates a comic from them. In the same way, the app draws a comic strip of the story, with each strip depicting the emotions of the people involved in the conversation.

The app works in several phases, from face detection to creation of the strip. Let us look at each in detail:

Phase 1 – Face Detection Engine:

It is a frontal face detector based on a Histogram of Oriented Gradients (HOG) and linear SVM. It identifies the face in the same way we human beings do but on a grand algorithmic scale.
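To make the HOG idea a little more concrete, here is a minimal pure-Python sketch of the orientation histogram for a single image cell. It is only an illustration: the function name `hog_cell_histogram`, the 9 unsigned orientation bins, and the toy 6x6 cell are assumptions, not details of the app. A full detector would compute such histograms over many cells, normalize them in blocks, and pass the resulting feature vector to a linear SVM.

```python
import math


def hog_cell_histogram(cell, bins=9):
    """Return a gradient-orientation histogram for one grayscale cell.

    `cell` is a list of rows of pixel intensities. Border pixels are
    skipped so that central differences are always defined.
    """
    height, width = len(cell), len(cell[0])
    hist = [0.0] * bins
    bin_width = 180.0 / bins  # unsigned gradients span 0..180 degrees
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = cell[y][x + 1] - cell[y][x - 1]  # horizontal gradient
            gy = cell[y + 1][x] - cell[y - 1][x]  # vertical gradient
            magnitude = math.hypot(gx, gy)
            if magnitude == 0:
                continue
            angle = math.degrees(math.atan2(gy, gx)) % 180.0
            # Hard assignment to one bin (no interpolation, for brevity),
            # weighted by gradient magnitude.
            hist[int(angle // bin_width) % bins] += magnitude
    return hist


# Toy cell containing a vertical edge: dark left half, bright right half.
edge = [[0, 0, 0, 255, 255, 255] for _ in range(6)]
histogram = hog_cell_histogram(edge)
```

For this toy cell, all gradient energy falls into the first bin, because the intensity changes only in the horizontal direction.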

How does it work?

The Face Detection Engine identifies the geometry of the face; key factors include the distance between the eyes and the distance from forehead to chin. The software identifies facial landmarks (one system identifies 81 of them) that are key to distinguishing a face. However, it is essential to eliminate backgrounds to get a clear picture. This engine is based on deep learning and leverages OpenCV libraries.

Phase 2 – Cartoon Engine:

A cartoonization engine is created, which is divided into three modules:

Facial features: The first engine synthesizes facial features, specifically eyes, nose, eyebrows, and lips, with stylization to give cartoonish features.

Facial outline: This creates a 2D vector mesh of the face.

Hair Detection: This would identify the hairstyle of the person and apply customization to it.

How does it work?

The outputs of these three modules are aligned with one another. The 81 points identified earlier are used to determine the significant characteristics of the face: eyes, nose, lips, and chin.

With a single photo, one neural network builds a 2D mesh of the user’s head. Then the mesh goes through the automatic stylization process that makes it look more cartoonish. Afterward, it is used as a basis of the cartoon’s head.

Next, other neural networks swing into action. Face parts such as hair, eyebrows, facial hair, and colors are classified and synthesized (generated) onto the mesh in the appropriate style.
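The stylization step can be pictured with a much simpler stand-in than a neural network. The sketch below uses a classic caricature trick: it exaggerates how far each mesh vertex deviates from a reference (average) face. The function `exaggerate_mesh`, the `factor` parameter, and the three-point meshes are hypothetical and only for illustration; they are not the app's actual stylization method.

```python
def exaggerate_mesh(points, reference, factor=1.5):
    """Exaggerate each 2D vertex's offset from the matching reference vertex.

    factor == 1.0 returns the mesh unchanged; larger values give a more
    cartoonish result.
    """
    if len(points) != len(reference):
        raise ValueError("mesh and reference must have the same vertex count")
    return [
        (rx + (px - rx) * factor, ry + (py - ry) * factor)
        for (px, py), (rx, ry) in zip(points, reference)
    ]


# Hypothetical meshes: two jaw corners and a chin point.
average_face = [(0.0, 0.0), (4.0, 0.0), (2.0, 3.0)]
user_face = [(0.0, 0.0), (4.0, 0.0), (2.0, 5.0)]  # longer chin than average

cartoon_face = exaggerate_mesh(user_face, average_face, factor=1.5)
```

Here the user's chin is longer than the reference chin, so the stylized mesh makes it longer still, while the vertices that match the reference stay where they are.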

Phase 3 – NLP Processor:

A sentiment analysis NLP engine also runs in parallel. It determines the emotional tone behind words to gain an understanding of the attitudes, opinions, and emotions expressed. Sentiment analysis, also known as opinion mining or emotion AI, classifies written text as positive, negative, or neutral in order to understand and gauge the speaker’s reactions.

The processor is trained with ML on almost 5 million records.
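As a rough illustration of the positive / negative / neutral output, here is a tiny lexicon-based scorer. It is a deliberately simplified stand-in: the processor described above is a trained ML model, whereas this sketch just counts words from two small, made-up word lists (`POSITIVE`, `NEGATIVE`), and the function name `classify_sentiment` is an assumption.

```python
import re

# Hypothetical mini-lexicons; a trained model would learn these signals.
POSITIVE = {"great", "love", "happy", "awesome", "good"}
NEGATIVE = {"bad", "hate", "sad", "terrible", "angry"}


def classify_sentiment(text):
    """Label text as 'positive', 'negative', or 'neutral' by word counts."""
    words = re.findall(r"[a-z']+", text.lower())
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


dialogue = [
    "I love this, it is awesome!",
    "That was a terrible day.",
    "See you at noon.",
]
labels = [classify_sentiment(line) for line in dialogue]
```

Each line of dialogue gets its own label, which is the kind of signal the later phases could use to pick an expression for the speaker.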

Phase 4 – Facial Emotions Engine:

How does it work?

An image matrix is created from the 81 points identified by the face detection engine. Training for these applications requires large datasets of facial imagery, specifically of eyes and eyebrows, with each image displaying a discrete emotion; the more labeled images, the better.

The engine outputs different cartoon images expressing the diverse emotions in the conversation.
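One way to picture how eye and eyebrow landmarks can be turned into an emotion label is sketched below. Everything here is assumed for illustration: the landmark names (`left_eye`, `left_brow`, and so on), the three labeled examples, and the nearest-neighbour lookup are hypothetical, and a real engine would be trained on a large labeled dataset as described above.

```python
import math


def eye_brow_features(landmarks):
    """Return scale-invariant brow-to-eye gaps from named 2D landmarks.

    Image coordinates are assumed, so y grows downward and a brow sits
    at a smaller y than its eye. Dividing by the distance between the
    eyes makes the features independent of face size.
    """
    left_eye, right_eye = landmarks["left_eye"], landmarks["right_eye"]
    eye_distance = math.dist(left_eye, right_eye)
    left_gap = (left_eye[1] - landmarks["left_brow"][1]) / eye_distance
    right_gap = (right_eye[1] - landmarks["right_brow"][1]) / eye_distance
    return (left_gap, right_gap)


def nearest_emotion(features, labeled_examples):
    """Return the label of the closest labeled feature vector (1-NN)."""
    return min(labeled_examples, key=lambda ex: math.dist(features, ex[0]))[1]


# Hypothetical labeled examples: (features, emotion).
training = [
    ((0.20, 0.20), "neutral"),
    ((0.40, 0.40), "surprised"),
    ((0.10, 0.10), "angry"),
]

face = {
    "left_eye": (30, 50), "right_eye": (70, 50),
    "left_brow": (30, 35), "right_brow": (70, 35),
}
features = eye_brow_features(face)
emotion = nearest_emotion(features, training)
```

Raised eyebrows produce a large brow-to-eye gap, so this face lands closest to the "surprised" example.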

Phase 5 – Comic Creator:

This acts as an organizer: it brings together the text and the cartoon images, creates the comic bubbles, and lays everything out in a comic strip format.
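The organizing step can be sketched in plain text. In the example below, each panel pairs a speaker and a detected emotion with a line of dialogue, and the dialogue is wrapped into an ASCII speech bubble. The `Panel` class, `speech_bubble`, `render_strip`, and the sample dialogue are all hypothetical; the real app composes cartoon images rather than text tags.

```python
import textwrap
from dataclasses import dataclass


@dataclass
class Panel:
    speaker: str
    emotion: str  # stands in for the emotion-specific cartoon image
    text: str


def speech_bubble(text, width=24):
    """Wrap text and draw a simple ASCII bubble around it."""
    lines = textwrap.wrap(text, width=width) or [""]
    inner = max(len(line) for line in lines)
    border = "+" + "-" * (inner + 2) + "+"
    body = ["| " + line.ljust(inner) + " |" for line in lines]
    return "\n".join([border, *body, border])


def render_strip(panels):
    """Join the panels, in order, into one text comic strip."""
    blocks = [
        f"[{p.speaker}: {p.emotion}]\n{speech_bubble(p.text)}" for p in panels
    ]
    return "\n\n".join(blocks)


strip = render_strip([
    Panel("Asha", "happy", "We won the hackathon!"),
    Panel("Ravi", "surprised", "No way, really?"),
])
print(strip)
```

Keeping the panel data separate from the rendering means the same list of panels could later be drawn with real cartoon images instead of text.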

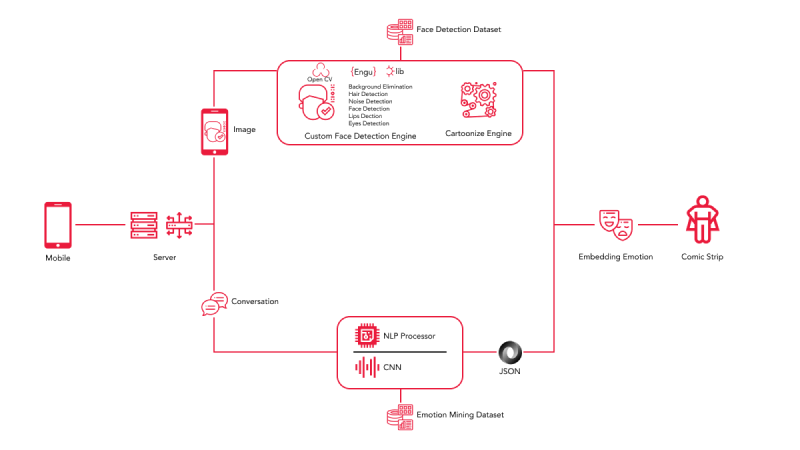

The Architecture

Technology:

- OpenCV

- Engu

- NodeJS

- Machine Learning

- NLP

The Application:

- Messaging chat as a Comic Strip!

- Learning apps using Comic Strip to make learning more interactive

- Create comic posters to create public awareness on familiar topics

- Storyboarding

- Visual learning and communication

A quick wrap-up: Through Comic Strip, our team’s vision is to bring a whole new realm of human interaction into human-computer interaction, so that computers not only understand what you direct them to do but can also respond to your facial expressions and emotional experiences. Comic Strip is a wonderful application, and we believe it can revolutionize virtual communication.

Watch the demo video here